Minimizing Artificial Intelligence Risks

Generative artificial intelligence (AI) tools have taken the world by storm. As with any rapid technological change, adoption has outstripped the implementation of security policies in many organizations. With employees eager to take advantage of AI tools, it’s important to introduce policies and education that govern their use and ensure that team members understand both the risks and the benefits. Here are some things to keep in mind as you work to minimize AI risks:

Intellectual Property and Private Information

A common use of AI tools is the creation of content such as articles, posts, art, and more. With the popularity of such tools, it’s important for employees to understand the risks that they can pose even when using them for seemingly innocuous tasks. One of these risks is that by submitting information to generative AI applications, a well-meaning employee may inadvertently disclose proprietary information, the company’s intellectual property (IP), or private information belonging to an individual. The danger is that these tools may use or retain information submitted by users, so it’s important to ensure that confidential information is not released to AI tools.

At the other end of the spectrum, an AI tool may itself draw on intellectual property without proper permission or attribution. Using content generated by a tool that has improperly used someone else’s IP could put the company at risk of violating IP rights. Several tools have emerged that check AI-generated content for plagiarism, and these can help mitigate this particular risk.

Bias and Misinformation

Generative AI technology relies heavily on the information it has been trained on. As a result, some tools may carry an inherent bias from the datasets used to train them. People using AI tools need to be aware of the potential for bias and actively look for ways to mitigate it wherever possible.

Similarly, AI technology may sometimes produce content that contains incorrect information, so it’s important to fact-check AI-generated content before relying on it or publishing it. Inaccurate content can become a liability issue for an organization, so it’s imperative that employees who use AI tools understand the risks, remain alert to misinformation, and know how to evaluate content for quality.

Cybersecurity Risks

Because AI technology has been adopted rapidly in many organizations, existing security policies may not fully address these tools. To keep the business safe, it’s important for IT teams to update those policies before problems arise where possible, and for the organization to provide education about artificial intelligence. Educating team members on vigilance, data safety, and other potential issues with generative AI technology goes hand in hand with embracing these tools.

In addition to these risks, AI tools are increasingly being used by cybercriminals and bad actors to launch sophisticated attacks on organizations. This includes more advanced social engineering and phishing/smishing campaigns as well as hacking. Team members who are aware of these possibilities and know what to do in the event of an issue will be better prepared to help protect the organization.

The Future of AI

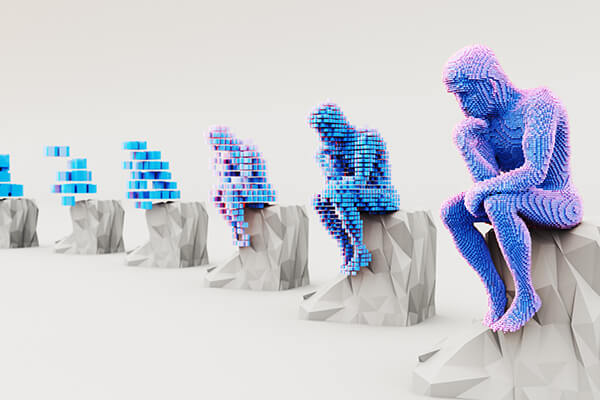

The outlook for artificial intelligence is bright as adoption picks up and more advanced tools are released to consumers. As that happens, organizations must weigh the benefits and risks to ensure that these tools benefit the business without exposing it to unnecessary risk. As the AI landscape continues to evolve, it will be interesting to see what new frontiers develop.

Looking for AI Experts?

Whether you’re building your own AI tools or looking for cybersecurity experts to keep your organization safe, we can connect you with the technology solutions and talent you need. Get in touch today to learn more about how we can help.
